What Is the Ollama API?

Ollama is an MIT-licensed server that runs open-weight language models entirely on your own machine.

Because every request stays on-device, no text or metadata ever leaves your computer — ideal for confidential or regulated documents.

Your only costs are the graphics card (if you do not already have one) and the electricity your hardware consumes; the software and the models listed below are free.

There are therefore no per-token fees, rate limits or mandatory cloud accounts.

Step-by-Step Guide

The instructions below assume you have administrator rights on the host PC.

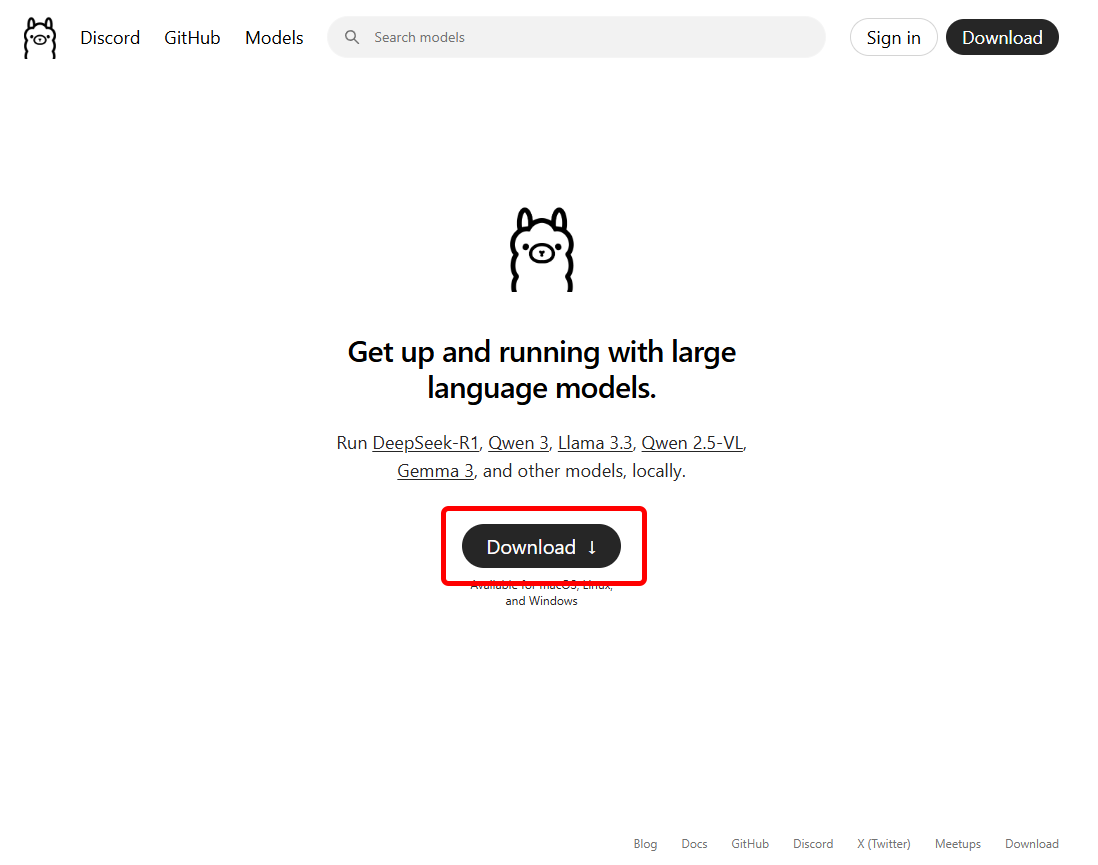

1. Install Ollama

- Open the Ollama homepage and click Download.

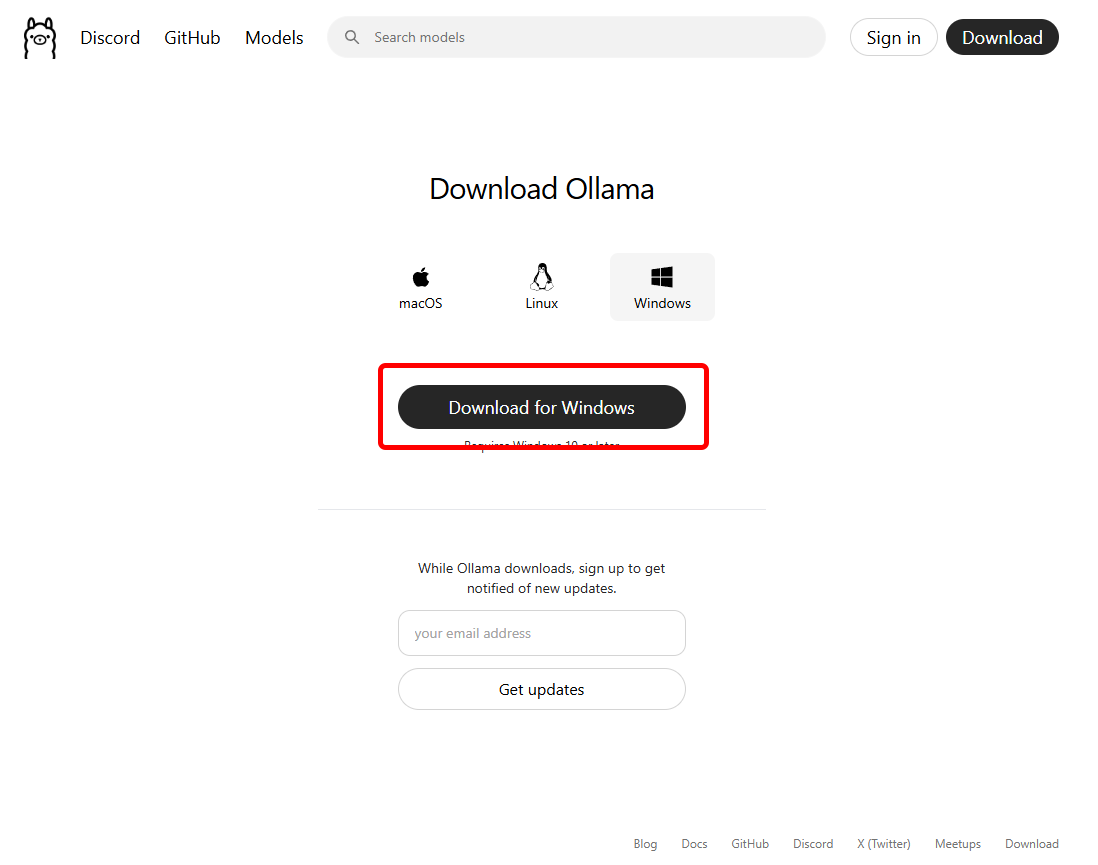

- Click Download for Windows.

- Run the downloaded OllamaSetup.exe.

After installation, Ollama starts a background service listening on http://localhost:11434.

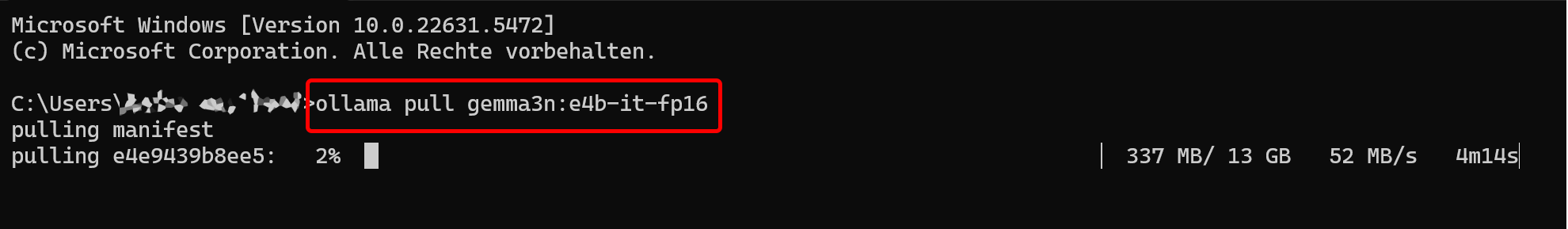

2. Download a Model

Open a terminal (or PowerShell) and pull one or more models. If you have a 16 GB GPU, try some of these models to see which works best for you:

ollama pull mistral-small3.2:24b-instruct-2506-q4_K_M

ollama pull deepseek-r1:32b-qwen-distill-q4_K_M

ollama pull llama3.1:8b-instruct-q6_K

ollama pull gemma3:12b-it-q8_0

ollama pull mistral-nemo:12b-instruct-2407-q6_K

ollama pull mistral:7b-instruct-v0.3-fp16

ollama pull phi4:14b-q4_K_M

If you have a 24 GB (or larger) GPU, you can try instead:

ollama pull mistral-small3.2:24b-instruct-2506-q4_K_M

ollama pull deepseek-r1:32b-qwen-distill-q4_K_M

ollama pull llama3.1:8b-instruct-fp16

ollama pull mixtral:8x7b-instruct-v0.1-q3_K_M

ollama pull gemma3:27b-it-q4_K_M

ollama pull phi4:14b-q4_K_M

ollama pull mistral-nemo:12b-instruct-2407-q6_K

ollama pull mistral:7b-instruct-v0.3-fp16

Each command downloads the weights once and stores them under ~/.ollama/models/ (on Windows, the .ollama\models folder inside your user profile). Expect roughly 6–20 GB per model, depending on parameter count and quantisation level.
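
To get a feel for how parameter count and quantisation level drive the download size, here is a minimal back-of-the-envelope sketch. The bits-per-weight figures are approximate community values for GGUF quantisations, not numbers published by Ollama, and the function name is hypothetical; real files differ slightly because of metadata and mixed-precision layers.

```python
# Approximate bits stored per weight for common quantisation levels.
# ASSUMPTION: rough community figures, not official Ollama values.
BITS_PER_WEIGHT = {
    "q3_K_M": 3.9,
    "q4_K_M": 4.85,
    "q6_K": 6.56,
    "q8_0": 8.5,
    "fp16": 16.0,
}


def estimate_size_gb(params_billions: float, quant: str) -> float:
    """Estimate the size of the weights in GB (10^9 bytes).

    billions of parameters * (bits per weight / 8) = gigabytes
    """
    return params_billions * BITS_PER_WEIGHT[quant] / 8


if __name__ == "__main__":
    # Nominal parameter counts taken from the model tags above.
    for label, params, quant in [
        ("llama3.1 8B", 8, "q6_K"),
        ("gemma3 12B", 12, "q8_0"),
        ("mistral-small3.2 24B", 24, "q4_K_M"),
        ("deepseek-r1 32B", 32, "q4_K_M"),
    ]:
        print(f"{label:22s} {quant:7s} ~{estimate_size_gb(params, quant):.1f} GB")
```

The same estimate is a useful first check on whether a model is likely to fit in your GPU's VRAM, bearing in mind that the context window and runtime buffers need additional memory on top of the weights.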

3. Start the Service Manually (Optional)

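The installer configures Ollama to start in the background automatically, but you can also run it in the foreground. Quit the background instance first (for example from its system-tray icon); otherwise port 11434 is already in use and the command fails: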

ollama serve

The startup log should then report that the server is listening on 127.0.0.1:11434, which is Ollama's default bind address.

Test your installation with:

curl http://localhost:11434/api/tags

A JSON list of local models confirms everything is working.
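
If you would rather script this check, the following sketch queries the same /api/tags endpoint with Python's standard library and prints the model names. It assumes the default address from this guide and that the response is a JSON object with a "models" array whose entries carry a "name" field; the function names are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # default address used in this guide


def parse_model_names(tags_json: str) -> list[str]:
    """Extract the model names from the JSON body returned by /api/tags."""
    data = json.loads(tags_json)
    return [model["name"] for model in data.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> list[str]:
    """Ask the local Ollama server which models are installed."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return parse_model_names(resp.read().decode("utf-8"))


if __name__ == "__main__":
    try:
        names = list_local_models()
    except OSError as exc:  # covers connection refused, timeouts, URLError
        print(f"Could not reach Ollama at {OLLAMA_URL}: {exc}")
    else:
        if names:
            print("\n".join(names))
        else:
            print("Server is up, but no models have been pulled yet.")
```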

4. Recommended Hardware

- GPU: 16 GB VRAM (NVIDIA RTX 4080 / 5080) or 24 GB VRAM or more (RTX 3090 or 4090; the RTX 5090 has 32 GB)

- System RAM: 16 GB or more

- Storage: SSD with at least 20 GB free space — more if you keep many models

Ollama can run purely on the CPU, but responses will be much slower. Running Ollama on a non-local server (e.g. a home NAS or VPS) is possible, but securing a publicly reachable instance — TLS, authentication, firewalling — is beyond the scope of this guide.

5. Connect Panofind to Ollama

- Open Settings → Summary & Chat in Panofind.

- Tick Activate AI functionality to summarise texts or ask questions about them.

- Select Ollama (self-hosted) from the provider list.

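- Leave the default endpoint http://127.0.0.1:11434/v1, or enter the address of another Ollama host on your network. That host must be configured to accept non-local connections, because Ollama listens only on 127.0.0.1 by default.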

- Click Save. The Summarise and Chat buttons will now appear in supported documents.
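
The /v1 suffix in the endpoint above is Ollama's OpenAI-compatible API. To confirm that this endpoint answers independently of Panofind, you can send it a chat request yourself. The sketch below is an illustration only: the system prompt, the function names and the choice of model are assumptions, and it does not claim to reproduce the requests Panofind actually sends.

```python
import json
import urllib.request

BASE_URL = "http://127.0.0.1:11434/v1"  # default endpoint from the settings above
MODEL = "llama3.1:8b-instruct-q6_K"     # ASSUMPTION: use any model you pulled in step 2


def build_summary_request(text: str, model: str = MODEL,
                          base_url: str = BASE_URL) -> urllib.request.Request:
    """Build a POST request for the OpenAI-style chat completions route."""
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": "Summarise the user's text in two sentences."},
            {"role": "user", "content": text},
        ],
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_body: str) -> str:
    """Pull the assistant's text out of a chat completions response."""
    return json.loads(response_body)["choices"][0]["message"]["content"]


if __name__ == "__main__":
    request = build_summary_request("Ollama runs open-weight language models locally.")
    try:
        # Generous timeout: the first request also has to load the model.
        with urllib.request.urlopen(request, timeout=300) as resp:
            print(extract_reply(resp.read().decode("utf-8")))
    except OSError as exc:
        print(f"Request failed: {exc}")
```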

Even with a dedicated GPU, responses will generally be slower and of lower quality than those from commercial cloud services (which, of course, incur usage fees).

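The first request to a model is slow because its weights must first be read from disk and transferred to the GPU. Subsequent requests are faster, but by default Ollama unloads an idle model after about five minutes; the next request then reloads it and is slow again. The idle period can be changed with the keep_alive request parameter or the OLLAMA_KEEP_ALIVE environment variable.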
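
If the reload delay bothers you, one option is to load the model ahead of time and ask Ollama to keep it in memory for longer. The sketch below sends a prompt-less request to Ollama's native /api/generate endpoint with a keep_alive value; the function name, the 30-minute duration and the model tag are illustrative assumptions.

```python
import json
import urllib.request


def build_preload_request(model: str, keep_alive: str = "30m",
                          base_url: str = "http://localhost:11434") -> urllib.request.Request:
    """Build a request that loads a model without generating any text.

    A generate request with no prompt only loads the model; keep_alive
    tells Ollama how long to keep it in memory after the request.
    """
    payload = {"model": model, "keep_alive": keep_alive}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # ASSUMPTION: replace the tag with a model you pulled in step 2.
    request = build_preload_request("llama3.1:8b-instruct-q6_K")
    try:
        with urllib.request.urlopen(request, timeout=300) as resp:
            resp.read()
        print("Model loaded and kept warm.")
    except OSError as exc:
        print(f"Preload failed: {exc}")
```

Keeping a model resident occupies VRAM for the whole period, so a long keep_alive is best reserved for the one model you use most.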

You’re all set — enjoy fully private summarising and chatting with Panofind and Ollama!